

#1 2022-12-22 15:07:45

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Building a standard kernel for a specific computer.

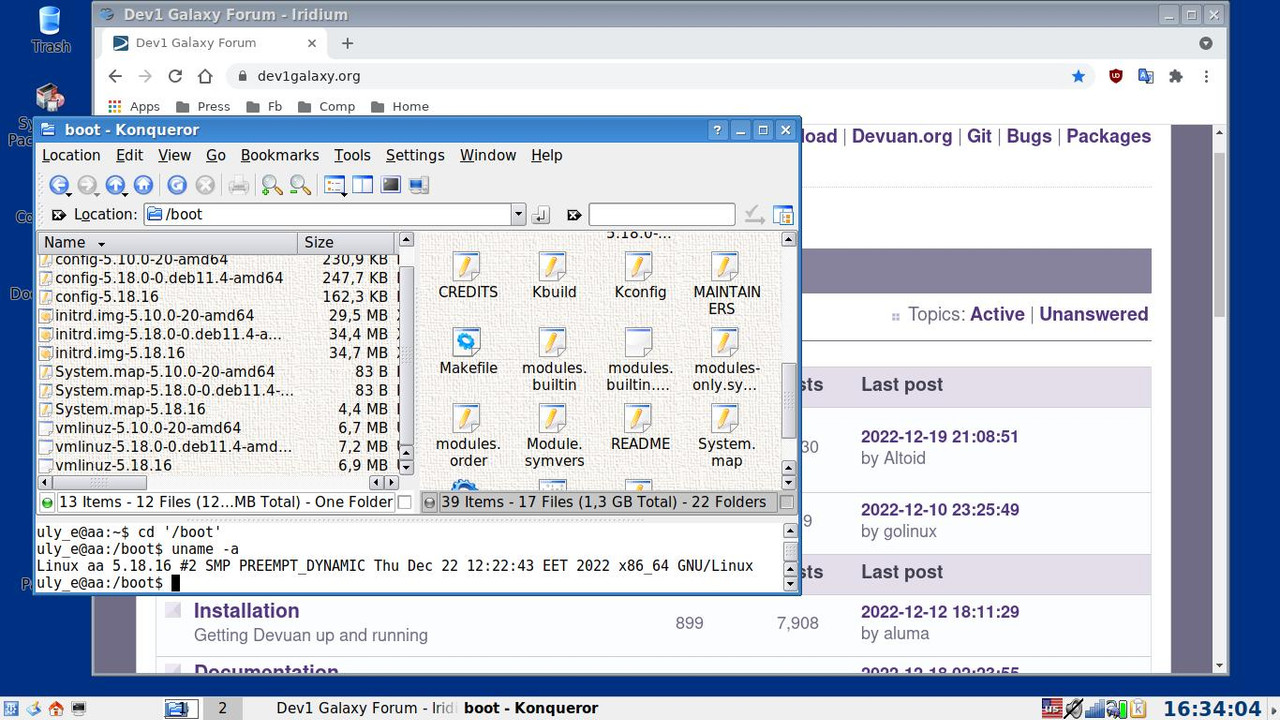

A small illustration of my proposal for kernel optimization.

There is a wonderful thread on building the kernel:

https://dev1galaxy.org/viewtopic.php?id=564

Our task is a little different, though: GitHub is not needed.

The procedure is as follows:

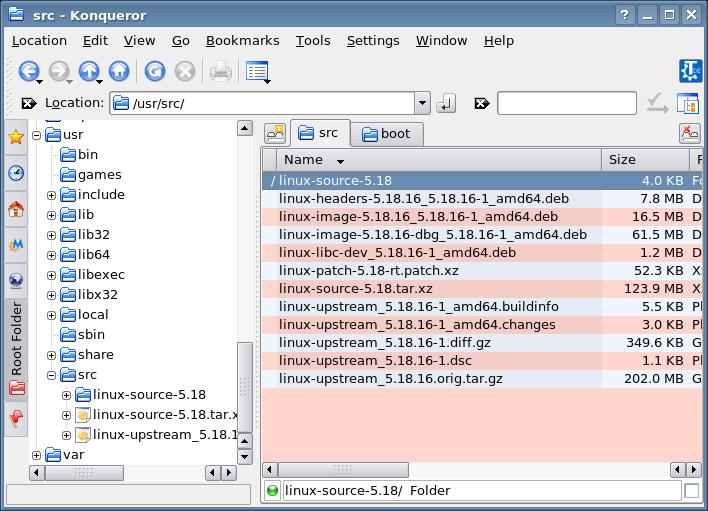

Install the corresponding linux-source-xxx package and unpack it.

apt-get build-dep linux

Run the following commands as root in the folder /usr/src/linux-source-xxx:

make localmodconfig

make menuconfig

Select what you need and remove what you don't.

make -j 6

make modules_prepare

make modules_install install

Then reboot.
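The steps above can be collected into a small script. This is a sketch, not aluma's own script: the helper names `run` and `build_local_kernel` are hypothetical, the unpacked source directory is passed as an argument, and by default (`DRY_RUN=1`) it only prints the commands. Keep in mind that `make localmodconfig` keeps only the modules loaded at the moment it runs (it works from `lsmod`), so plug in the devices you normally use first.

```sh
#!/bin/sh
# Sketch of the procedure above (hypothetical helpers, not a tested tool).
# Assumes Debian/Devuan, deb-src lines enabled for build-dep, and the
# unpacked linux-source tree passed as $1. DRY_RUN=1 (default) only prints.

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"          # show the command instead of running it
    else
        "$@"
    fi
}

build_local_kernel() {
    src=$1                   # e.g. /usr/src/linux-source-xxx
    jobs=${2:-4}             # number of parallel make jobs

    run apt-get build-dep linux || return 1                 # build dependencies
    run make -C "$src" localmodconfig || return 1           # trim to loaded modules
    run make -C "$src" menuconfig || return 1               # adjust options by hand
    run make -C "$src" -j "$jobs" || return 1               # kernel image and modules
    run make -C "$src" modules_prepare || return 1          # as in the list above
    run make -C "$src" modules_install install              # install modules, then kernel
}

# Example (prints only): build_local_kernel /usr/src/linux-source-xxx 4
```

To really run it, source the file and call `DRY_RUN=0 build_local_kernel /usr/src/linux-source-xxx 4` as root.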

Here is the result: built without AppArmor, TOMOYO and a few other things, without going overboard.


#2 2022-12-22 17:12:28

- Head_on_a_Stick

- Member

- From: London

- Registered: 2019-03-24

- Posts: 3,125


Re: Building a standard kernel for a specific computer.

Would it not be better to build a .deb package? Instructions here:

https://www.debian.org/doc/manuals/debi … n-official

And this will use all of the cores for any machine:

# make -j$(nproc)

Brianna Ghey — Rest In Power


#3 2022-12-22 18:35:05

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

@Head_on_a_Stick

I did not set out to write complete instructions.

Of course, you can build a package; the "HOWTO" at that link gives an example command.

In general, compiling the kernel is a job for a reasonably skilled user with their own preferences.

Thanks for the make command tip.

I hadn't even thought of that.

P.S. One caveat: this needs disk space. The initial 4.5 GB was not enough for me, and I had to use a GParted Live USB.

Last edited by aluma (2022-12-22 18:36:34)


#4 2022-12-23 12:24:12

- blackhole

- Banned

- Registered: 2020-03-16

- Posts: 194

Re: Building a standard kernel for a specific computer.

Would it not be better to build a .deb package?

Unless you're planning to distribute it, and/or are maintaining your own repository and serving the kernel as updates to multiple systems which you administer, there's actually little point to packaging every kernel rebuild as a deb.

If you're maintaining your own kernel for personal use - i.e. doing a git pull, rebuilding and installing, etc, it's far more expedient to just use make.

Last edited by blackhole (2022-12-23 12:24:40)


#5 2022-12-24 10:15:55

- Head_on_a_Stick

- Member

- From: London

- Registered: 2019-03-24

- Posts: 3,125


Re: Building a standard kernel for a specific computer.

there's actually little point to packaging every kernel rebuild as a deb

What about all of the patches Debian apply to their kernel? And why would the kernel be any different to any other software? I wouldn't dream of using plain make install, even if a decent uninstall target is provided in the Makefile. It just seems so... crude to not use the provided packaging tools.

Although having said that Debian's packaging is so ridiculously complicated compared to, for example, Arch or Alpine that I can see why you might want to avoid it :-)



#6 2022-12-24 13:30:48

- Evenson

- Member

- Registered: 2022-09-08

- Posts: 58

Re: Building a standard kernel for a specific computer.

blackhole wrote: there's actually little point to packaging every kernel rebuild as a deb

What about all of the patches Debian apply to their kernel? And why would the kernel be any different to any other software? I wouldn't dream of using plain make install, even if a decent uninstall target is provided in the Makefile. It just seems so... crude to not use the provided packaging tools.

Although having said that Debian's packaging is so ridiculously complicated compared to, for example, Arch or Alpine that I can see why you might want to avoid it :-)

Custom kernels are a personal matter. Debian mostly provides vanilla kernels. It's the same reason I would not package a dwm or dmenu binary with my own configs as a .deb: it's much easier to use make, build-essential and tcc. If I were a package maintainer for Devuan or Debian, it would be a different story.

"A stop job is running..." - SystemD


#7 2022-12-24 19:54:47

- Head_on_a_Stick

- Member

- From: London

- Registered: 2019-03-24

- Posts: 3,125


Re: Building a standard kernel for a specific computer.

I always build my own packages for dwm & dmenu. I even made a live ISO for my custom dwm desktop.



#8 2022-12-24 19:58:29

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

What about all of the patches

That's the crux of it!

The linux-source package contains two archives: the sources and a patch. Whether to apply the patch is up to you.
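A minimal sketch of that choice. It assumes the Debian 12 file names as I understand them, `/usr/src/linux-source-6.1.tar.xz` and, for the patch, `/usr/src/linux-patch-6.1-rt.patch.xz` (which I believe is the PREEMPT_RT patch); check with `dpkg -L linux-source-6.1` before relying on them. The function name `unpack_source` is hypothetical.

```sh
# Sketch: unpack the linux-source tarball and optionally apply the extra
# patch shipped next to it. File names are assumptions based on Debian 12.
unpack_source() {
    ver=$1                  # e.g. 6.1
    srcdir=${2:-/usr/src}   # where linux-source-$ver put its files
    apply_patch=${3:-no}    # "yes" to apply the patch as well

    tarball="$srcdir/linux-source-$ver.tar.xz"
    patchfile="$srcdir/linux-patch-$ver-rt.patch.xz"

    if [ ! -f "$tarball" ]; then
        echo "missing $tarball" >&2
        return 1
    fi
    tar -xf "$tarball" -C "$srcdir" || return 1   # GNU tar detects xz itself

    if [ "$apply_patch" = yes ]; then
        if [ ! -f "$patchfile" ]; then
            echo "missing $patchfile" >&2
            return 1
        fi
        # -p1 strips the leading a/ b/ component used in kernel patches
        xzcat "$patchfile" | patch -d "$srcdir/linux-source-$ver" -p1
    fi
}

# Usage (as root): unpack_source 6.1 /usr/src no
```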

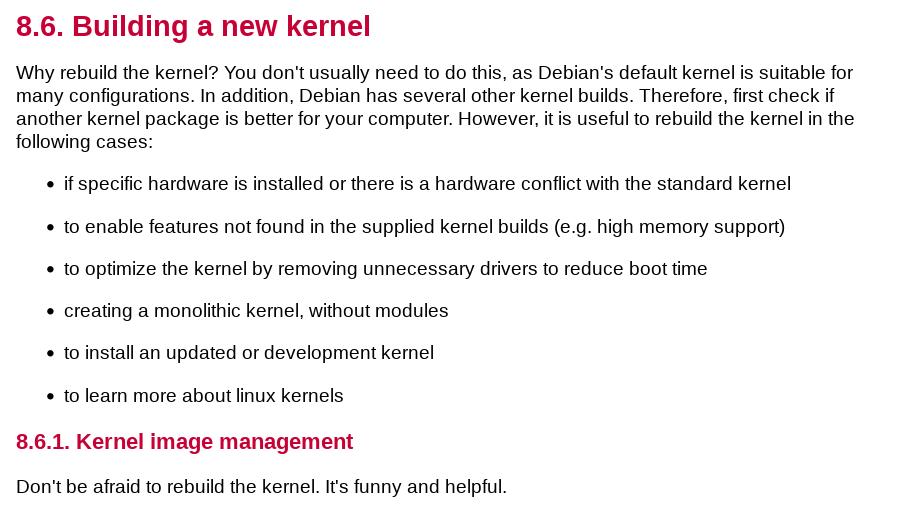

Here they say that compiling a kernel is fun.

Absolutely correct.

For me, in the dial-up days, it was common to get a new release as a set of CDs. By then I had played enough computer games, and this activity was just as interesting.

P.S. Regarding make -j:

The issue is not the number of cores but the order in which the various parts are compiled. With a large number of jobs, errors are possible when some parts are compiled before the ones they depend on are ready.

In my case, with an AMD E-300, I use -j 4.

The example in the first post was simply copied from the "HOWTO".

Last edited by aluma (2022-12-24 20:33:30)


#9 2022-12-28 16:27:17

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646


#10 2022-12-28 17:49:59

- Head_on_a_Stick

- Member

- From: London

- Registered: 2019-03-24

- Posts: 3,125


Re: Building a standard kernel for a specific computer.

P.S. Regarding make -j .

The question is not in the number of cores, but in the sequence of compilation of various parts. With a large number of tasks, errors are possible when some are compiled before the necessary ones are ready.

Utter nonsense.

Last edited by Head_on_a_Stick (2022-12-28 18:01:54)



#11 2022-12-28 18:56:06

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

aluma wrote: P.S. Regarding make -j .

The question is not in the number of cores, but in the sequence of compilation of various parts. With a large number of tasks, errors are possible when some are compiled before the necessary ones are ready.

Utter nonsense.

I'm too lazy to argue and hunt for links.

Here's one I have at hand:

"# make

This will build the kernel image you will install later. You can use -jn as a make argument, where n will be the number of CPU cores in your system + 1 in order to enable parallel building which, of course, will speed up the process."

https://linuxconfig.org/in-depth-howto- … figuration

P.S. I would venture to suggest that you test this in practice: compile the kernel with different values of -j. On your computer this will not take long, unlike on mine.
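One way to run that experiment, as a sketch: the helper names are hypothetical, `$1` is assumed to be an already configured tree, and each run starts from `make clean` (which keeps `.config`) so the timings are comparable. The candidate list includes `nproc` and `nproc + 1`, the two rules of thumb mentioned in this thread.

```sh
# Sketch: time a full build for several -j values (GNU make assumed).
cores=$(nproc 2>/dev/null || getconf _NPROCESSORS_ONLN)

job_candidates() {
    # 1, cores, cores+1 and 2*cores, sorted numerically without duplicates
    printf '%s\n' 1 "$cores" "$((cores + 1))" "$((cores * 2))" | sort -n -u
}

time_builds() {
    src=$1                                 # configured kernel tree
    for j in $(job_candidates); do
        make -C "$src" clean >/dev/null    # same starting point each time
        start=$(date +%s)
        if make -C "$src" -j "$j" >/dev/null 2>&1; then
            echo "-j $j: $(( $(date +%s) - start )) s"
        else
            echo "-j $j: build failed"
        fi
    done
}

# Usage: time_builds /usr/src/linux-source-xxx
```

On a slow machine a full run takes several complete builds, so it may be worth trimming the candidate list first.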

P.P.S. In any case, a kernel compiled for a specific computer boots and runs faster than the standard one.

In practice, this means you can use "human" DEs such as Trinity or XFCE instead of hunting for "minimal", command-line-oriented ones.

But programmers have their own opinion; they don't care about users.

Last edited by aluma (2022-12-28 20:54:01)


#12 2022-12-30 18:32:35

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

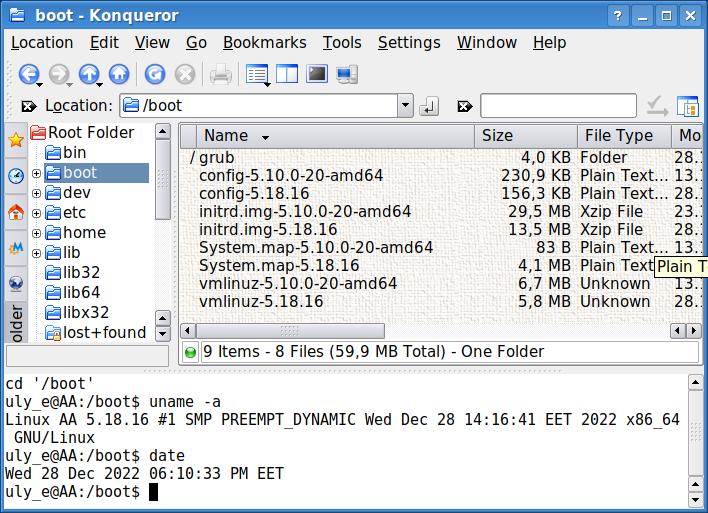

Score in the hardinfo GPU test (in "parrots", i.e. arbitrary units):

- standard kernel (5.10..., 5.18...): 1547

- custom kernel: 1605
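The relative difference between the two scores works out as follows (assuming each figure is a single run; no spread is given, so part of the gap may be noise):

$$
\frac{1605 - 1547}{1547} = \frac{58}{1547} \approx 0.037 \quad (\text{about } 3.7\,\%)
$$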


#13 2023-10-23 09:35:28

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

Daedalus.

The result of building the linux-image-6.1.xx kernel for Lenovo s205.

localhost:/home/uly_15.3/Downloads/Dist/Deevuan # ls -lh

total 6.5M

-rw-r--r-- 1 root root 0 Oct 23 12:29 ls

-rw-r--r-- 1 uly_15.3 users 6.5M Oct 22 16:29 vmlinuz-6.1.55s205

localhost:/home/uly_15.3/Downloads/Dist/Deevuan #

The catch is that you need a modern computer for this.

On a machine with a Core 2 Duo E8400 and 4 GB of RAM, compilation takes 3 hours.

Last edited by aluma (2023-10-23 09:37:05)


#14 2023-10-24 09:55:31

- PedroReina

- Member

- From: Madrid, Spain

- Registered: 2019-01-13

- Posts: 295


Re: Building a standard kernel for a specific computer.

Another question is that for this you need to have a modern computer.

On a machine with a Core 2Duo E8400/4Gb, compilation takes 3 hours

My first custom kernel needed all night to compile on a 486 with 16 MB of RAM.


#15 2023-10-24 11:10:56

- Altoid

- Member

- Registered: 2017-05-07

- Posts: 1,977

Re: Building a standard kernel for a specific computer.

Hello:

... first custom kernel needed all night to compile ...

I have many times thought about the possibility/convenience/need of doing such a thing but always came up, for some reason or other, short of arguments.

Not that I did not have enough of them, what I did not and do not have is previous experience or time.

I also adhere to the "if it works, leave it be" philosophy.

But being a stubborn tinkerer, I have a rather bad track record on that account.

My box is a Sun MS Ultra 24 WS with an Intel Q9550 + 16 GB RAM, an LSI SAS1068E SAS controller and a pair of Nvidia Quadro FX580 cards to drive three 19" monitors, two of them Samsung SyncMaster 940N. There is also an Adaptec 2940UW SCSI controller for my Umax S-6E scanner and a USB 3.0 PCIe card.

All the hardware listed above is ranked as old or ancient (depending on who is talking) but my take is that if it works as expected, it is neither old nor ancient.

Look at the Voyager probe ...

That said, barring some event that kills the motherboard, I should not need any hardware upgrades, except perhaps the SAS controller and drives (SAS-1, 3.0 Gbit/s), a couple of years from now when a good bargain justifies it.

But I see that at some point in the future, an always fast approaching horizon in IT, it may be a good idea to churn out a slimmed down/monolithic/dedicated kernel for my box, with just what its hardware needs to run.

My main doubt with respect to that is the eventual updating/upgrading of the kernel.

How does that work? Does the kernel have to be compiled every time?

I'd appreciate some insight into this aspect of the kernel building issue.

Thanks in advance.

Best,

A.


#16 2023-10-24 16:06:46

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

@Altoid

Hello!

Yes, when updating the kernel you need to compile a new one. Therefore, if a standard kernel is sufficient for your tasks, there is no point in compiling.

The most time-consuming part is the initial configuration. The .config file has more than 5000 lines, and about as many questions need to be answered correctly.

After that it's easier: just reuse your own configuration file.
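A sketch of reusing a tuned configuration with a newer source tree. The helper name `reuse_config` is hypothetical; `olddefconfig` and `oldconfig` are standard kernel make targets: the first silently takes defaults for options that did not exist in the old file, the second asks about each of them interactively.

```sh
# Sketch: carry an existing .config over to a newly unpacked tree.
# $1 = new kernel tree, $2 = saved config, e.g. /boot/config-6.1.55-s205
reuse_config() {
    newtree=$1
    oldconfig=$2
    if [ ! -f "$oldconfig" ]; then
        echo "no such config: $oldconfig" >&2
        return 1
    fi
    cp "$oldconfig" "$newtree/.config" || return 1
    # keep every old answer, use defaults for options new in this version
    # (use "oldconfig" instead to be asked about each new option)
    make -C "$newtree" olddefconfig
}

# Usage: reuse_config /usr/src/linux-source-xxx /boot/config-$(uname -r)
```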

My “couch surfer” Lenovo s205 has an AMD E-300 and 4 GB of RAM, so even a slight improvement from a custom kernel is noticeable. Well, it's kind of fun...

Regards.


#17 2023-10-24 18:07:11

- PedroReina

- Member

- From: Madrid, Spain

- Registered: 2019-01-13

- Posts: 295


Re: Building a standard kernel for a specific computer.

My main doubt with respect to that is the eventual updating/upgrading of the kernel.

Nowadays distribution kernels ship the whole range of modules ready to load, so I don't see why a custom kernel is needed anymore. The last time I built one I was still young.

Still, sometimes you need to compile a kernel tailored to the hardware at hand. As aluma said, you have to answer a lot of questions, but only if you configure from scratch. You can take a shortcut by starting from an existing config file. When upgrading, use your own config file again to recompile.

It was not much trouble, and it was trendy when I did it. ¡YMMV!


#18 2023-10-25 09:18:04

- Altoid

- Member

- Registered: 2017-05-07

- Posts: 1,977

Re: Building a standard kernel for a specific computer.

Hello:

... kernel you need to compile a new one.

I see ...

I understand that this would be for a major update.

e.g. 4.9 -> 5.0

Would it also be the same for minor ones?

e.g. 4.9.x -> 4.9.y?

... don't see why a custom kernel is required anymore.

Only thought about it for the reasons in my OP.

Tailored to the specific hardware in my box because eventually the kernel will have more unneeded modules than needed ones.

Thank you both for your input.

Best,

A.


#19 2023-10-25 13:40:34

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

Would it also be the same for minor ones?

eg: 4.9x -> 4.9y?

Yes, you'll have to rebuild.

The versions differ; you can see the changes in the "news" section here:

https://tracker.debian.org/pkg/linux
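Since each new point release (say 6.1.55 to 6.1.66) means a new build, a small check like this can tell whether a rebuild is due. It is a sketch: `version_gt` is a hypothetical helper built on GNU `sort -V`, and the version numbers are only examples.

```sh
# Sketch: compare kernel version strings with GNU sort -V.
version_gt() {
    # succeeds if $1 is a strictly newer version than $2
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}

# Version of the running kernel without the local suffix,
# e.g. "6.1.55-s205" -> "6.1.55"
running=$(uname -r | cut -d- -f1)

# Usage: version_gt 6.1.66 "$running" && echo "newer source available, rebuild"
```

`sort -V` is used rather than plain string comparison because, for example, 6.1.100 must sort after 6.1.99.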

Regards.

Last edited by aluma (2023-10-25 13:41:01)


#20 2023-10-26 08:45:52

- PedroReina

- Member

- From: Madrid, Spain

- Registered: 2019-01-13

- Posts: 295


Re: Building a standard kernel for a specific computer.

... because eventually the kernel will have more unneeded modules than needed ones.

In my opinion, unneeded modules don't harm the system; they just take up space on disk. I even think (though I've never done it) that they can be deleted without issues if you know what you are doing.
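To see how much of the module tree a machine actually uses, a rough count like this may help. It is a sketch with a hypothetical function name; it assumes the usual `/lib/modules/<release>` layout and module files named `*.ko` (possibly compressed, e.g. `*.ko.xz`), and it reads `/proc/modules`, which is where `lsmod` gets its list.

```sh
# Sketch: compare modules installed on disk with modules loaded right now.
module_usage() {
    dir=${1:-/lib/modules/$(uname -r)}
    # count module files, compressed or not
    ondisk=$(( $(find "$dir" -name '*.ko*' -type f | wc -l) ))
    size=$(du -sh "$dir" | cut -f1)              # disk space of the whole tree
    if [ -r /proc/modules ]; then
        loaded=$(( $(wc -l < /proc/modules) ))   # one line per loaded module
    else
        loaded=0
    fi
    echo "modules on disk: $ondisk ($size), loaded now: $loaded"
}

# Usage: module_usage
```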


#21 2023-10-26 09:30:56

- Altoid

- Member

- Registered: 2017-05-07

- Posts: 1,977

Re: Building a standard kernel for a specific computer.

Hello:

... you'll have to rebuild.

Right.

... don't harm the system, just take up space ...

Yes, that was not a problem.

What I was thinking was that the system would be nimbler.

Thank you both for your input.

It would seem that I'll keep using the standard kernel.

Best,

A.


#22 2023-10-26 11:43:19

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

In my opinion, unneeded modules don't harm the system, just take up space on disk. I even do think (never did) that they can be deleted without issues if you know what you are doing.

Agree.

The issue is a different one: the size of the kernel and the unnecessary code built into it. For example, support for both Intel and AMD platforms is built into the kernel; on a particular computer one of them is obviously redundant. And so on.
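To check what a given config does about CPU vendors, something like this can be used. A sketch: the function name is hypothetical; `CONFIG_CPU_SUP_INTEL` and `CONFIG_CPU_SUP_AMD` are the x86 option names as I understand them, and as far as I know they can only be switched off once `CONFIG_PROCESSOR_SELECT` (itself only visible with `CONFIG_EXPERT`) is enabled. Verify in `make menuconfig` before relying on it.

```sh
# Sketch: report CPU-vendor support options in a kernel config file.
cpu_vendor_support() {
    cfg=${1:-/boot/config-$(uname -r)}
    for opt in CPU_SUP_INTEL CPU_SUP_AMD; do
        if grep -qx "CONFIG_$opt=y" "$cfg"; then
            echo "$opt: built in"
        else
            echo "$opt: not set"
        fi
    done
}

# Possible follow-up inside the source tree (assumption, check menuconfig):
#   scripts/config --enable EXPERT --enable PROCESSOR_SELECT --disable CPU_SUP_INTEL
#   make olddefconfig
```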

Regards.


#23 2023-10-26 13:25:57

- greenjeans

- Member

- Registered: 2017-04-07

- Posts: 1,511


Re: Building a standard kernel for a specific computer.

What I was thinking was that the system would be nimbler.

A.

In my experience a smaller system devoid almost entirely of unneeded cruft is FAR nimbler and faster, I have gone so far as to test this assumption thoroughly in the past on multiple machines and I assure you it is so, though the effect is far more noticeable on older machines with lower specs.

I used to know a guy who was way into Gentoo, and he always said I would never know what real speed was until I built a Gentoo system for a specific machine.

Too much work though, lol, for this lazy ol country boy.

BTW, thanks to everyone in this thread, this is a very interesting topic for me. I've never compiled a custom kernel before, but I think I'd like to give it a go in the future. I do love a small fast system. I have to spend some time today converting SVG icons into PNGs for some new programs I'm using; SVGs really bog down the main menu on a lower-spec machine when you use icons in addition to the program names.

Last edited by greenjeans (2023-10-26 13:33:16)

https://sourceforge.net/projects/vuu-do/ New Vuu-do isos uploaded December 2025!

Vuu-do GNU/Linux, minimal Devuan-based Openbox and Mate systems to build on. Also a max version for OB.

Devuan 5 mate-mini iso, pure Devuan, 100% no-vuu-do.

Devuan 6 version also available for testing.

Please donate to support Devuan and init freedom! https://devuan.org/os/donate


#24 2023-10-27 10:19:56

- mirrortokyo

- Member

- Registered: 2021-04-08

- Posts: 74

Re: Building a standard kernel for a specific computer.

I build custom kernels at least for each release candidate and release, using a script like:

MAKEFLAGS='HOSTCC=gcc-13 CC=gcc-13' make -j7 menuconfig bindeb-pkg

One can remove drivers for unused hardware, as well as unused filesystems and protocols, and speed up the build considerably. (One slow machine gets its kernel rebuilt without netfilter support, which shortens the build significantly.)
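The same idea as a sketch: switch netfilter off in an existing `.config` with the tree's `scripts/config` helper, let `olddefconfig` sort out dependent options, then build Debian packages on all cores. The function names are hypothetical, `gcc-13` is taken from the script above, and with `DRY_RUN=1` (the default) the commands are only printed. Passing `HOSTCC=`/`CC=` on the make command line should have the same effect as putting them in `MAKEFLAGS`; they are given to `olddefconfig` too, since the configuration records which compiler it was made with.

```sh
# Sketch: build .deb packages of a kernel without netfilter support.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

slim_deb_build() {
    tree=$1                             # configured kernel source tree
    jobs=$(nproc)                       # use every core
    run "$tree/scripts/config" --file "$tree/.config" --disable NETFILTER || return 1
    run make -C "$tree" HOSTCC=gcc-13 CC=gcc-13 olddefconfig || return 1
    run make -C "$tree" -j "$jobs" HOSTCC=gcc-13 CC=gcc-13 bindeb-pkg
}

# Afterwards (as root), from the directory above the tree:
#   dpkg -i linux-image-*.deb
```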

If something breaks, one can still have alternative kernels to boot into and report problems to the maintainers.


#25 2023-10-27 12:45:46

- aluma

- Member

- Registered: 2022-10-26

- Posts: 646

Re: Building a standard kernel for a specific computer.

I'm a regular user, not a programmer.

Creating the .config file takes the most time. Developers make their own work easier: there are many options that are only needed to output debugging information, etc.

For me, it's almost like deciphering hieroglyphs. Kdif helps by showing where the files differ, and so does the make mrproper command, which returns the source tree to its original state.
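One caution with `mrproper`: besides generated files it also deletes `.config` (`make clean` keeps it). A sketch that saves the config first and restores it afterwards; the function name and the default backup location are arbitrary choices.

```sh
# Sketch: run "make mrproper" without losing the hand-tuned .config.
clean_keep_config() {
    tree=$1
    backup=${2:-$HOME/kernel-config.$(date +%Y%m%d%H%M%S)}
    if [ -f "$tree/.config" ]; then
        cp "$tree/.config" "$backup" || return 1   # save before it is deleted
        echo "saved .config to $backup"
    fi
    make -C "$tree" mrproper || return 1           # back to pristine sources
    if [ -f "$backup" ]; then
        cp "$backup" "$tree/.config"               # restore the saved config
    fi
}

# Usage: clean_keep_config /usr/src/linux-source-xxx
```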

This is my final version for Daedalus (vmlinuz-6.1.55-s205, initrd.img-6.1.55-s205):

uly_e@AA:/boot$ ls -lh

total 68M

-rw-r--r-- 1 root root 254K Jul 27 20:28 config-6.1.0-10-amd64

-rw-r--r-- 1 root root 178K Oct 26 13:33 config-6.1.55-s205

drwxr-xr-x 6 root root 4.0K Oct 26 19:26 grub

-rw-r--r-- 1 root root 37M Oct 8 21:52 initrd.img-6.1.0-10-amd64

-rw-r--r-- 1 root root 14M Oct 26 17:02 initrd.img-6.1.55-s205

-rw-r--r-- 1 root root 83 Jul 27 20:28 System.map-6.1.0-10-amd64

-rw-r--r-- 1 root root 3.0M Oct 26 13:33 System.map-6.1.55-s205

-rw-r--r-- 1 root root 7.7M Jul 27 20:28 vmlinuz-6.1.0-10-amd64

-rw-r--r-- 1 root root 5.3M Oct 26 13:33 vmlinuz-6.1.55-s205

uly_e@AA:/boot$ uname -a

Linux AA 6.1.55-s205 #1 SMP PREEMPT Thu Oct 26 16:33:50 EEST 2023 x86_64 GNU/Linux

uly_e@AA:/boot$